In today's fast-paced, technology-driven landscape, the allure of Artificial Intelligence (AI) is more evident than ever, and this is nowhere more pronounced than in the rise of Generative AI frameworks. These tools, equipped with the remarkable capability to produce new, innovative content based on pre-existing data, have carved out a significant place for themselves in a multitude of industries, from entertainment and content creation to finance and healthcare.

According to Gartner research, many HR leaders are considering how to invest in this new technology for their HR function. They are open to implementing generative AI such as ChatGPT with their existing provider at no cost (32%), with a new service provider (24%), by building their own solutions (24%), and by including it in their roadmap or as a future purchasing criterion (24%).

But as Winston Churchill said, "Where there is great power there is great responsibility." As business and HR leaders increasingly look to incorporate these powerful tools into their strategy and operational workflow, understanding the risks attached to them is not only important, but essential.

This comprehensive guide aims to delve deep into the nuances, complexities, risks, and implications that accompany the use of Generative AI. It also discusses Darwinbox’s strategy to mitigate these risks and use AI responsibly for its modern HRMS platform.


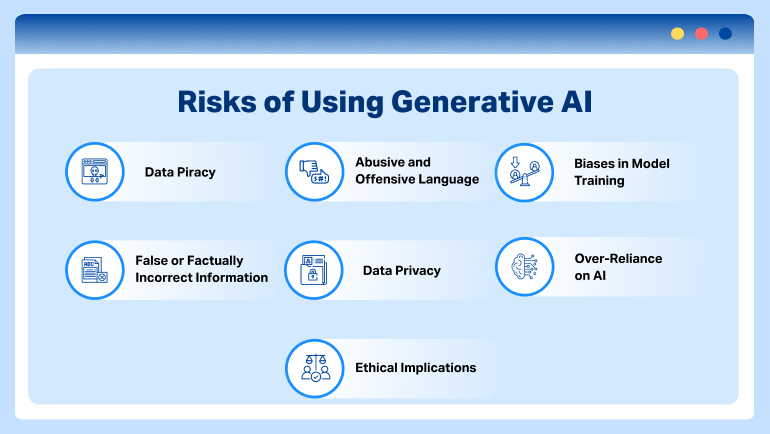

Data Piracy: The Silent Enemy

Generative AI operates on the backbone of data, consuming vast amounts of it to improve its proficiency and accuracy. The more data these models consume, the more effective and precise they become. However, this seemingly harmless trait involves a serious risk. These AI models can inadvertently reproduce copyrighted or proprietary content, thereby inviting issues related to data piracy.

For instance, a generative model designed to create original music compositions could unintentionally produce melodies that closely resemble existing, copyrighted compositions. The implications are far-reaching, including legal disputes, financial penalties, and even a crisis that could jeopardize a company's reputation.

Darwinbox’s approach to address this risk is twofold:

- We ensure that no copyrighted or patented data is used to adapt a generative AI model across domains.

- While prompting the model for a task, we make sure it cites the source while making references to any IP. Also, we regularly review the output manually to address potential deviations.


Abusive and Offensive Language: Treading a Minefield

Despite their advanced algorithms, AI systems are not immune to the risks of generating content that can be deemed offensive, harmful, or abusive. Historical incidents like the scandal surrounding Microsoft's Tay chatbot, which started posting highly inappropriate content, are a testament to this vulnerability. Without adequate checks and oversight, these AI tools can inadvertently reflect and even amplify the negative, harmful aspects of the data they're trained on, creating ethical and PR nightmares.

Darwinbox’s approach to address this risk:

To counter AI systems producing offensive content, Darwinbox uses a combination of technical and human measures. Apart from careful curation of training data sets, content filters screen for inappropriate outputs. There is also an additional, essential layer of human supervision, ensuring that AI-generated content stays within socially acceptable boundaries.
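The combination of an automated filter and human supervision described above can be sketched as follows. This is a minimal illustration, not Darwinbox's implementation: the `BLOCKED_TERMS` set, the `ReviewQueue` class, and the `screen_output` function are hypothetical names, and a production system would more likely rely on a maintained lexicon or a trained classifier than on a hand-written word list.

```python
import re
from dataclasses import dataclass, field

# Hypothetical blocklist used only for illustration.
BLOCKED_TERMS = {"idiot", "stupid"}


@dataclass
class ReviewQueue:
    """Holds outputs that a human reviewer must approve before release."""
    pending: list = field(default_factory=list)


def screen_output(text: str, queue: ReviewQueue) -> bool:
    """Return True if the text may be released; otherwise queue it for review."""
    # Lowercase and split into words so matching ignores case and punctuation.
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & BLOCKED_TERMS:
        queue.pending.append(text)  # flagged: a human makes the final call
        return False
    return True
```

Flagged text is held rather than discarded, so the reviewer, not the filter, makes the final decision.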


Biases in Model Training: The Unseen Hand

One of the more insidious challenges comes from the inherent biases present in the training data used to refine these models. If the data used for training is skewed or biased, the output will inevitably reflect those biases. This has been observed in facial recognition systems, which can misidentify individuals from certain ethnic backgrounds at a higher rate. These biases can have severe ramifications, particularly when the technology is applied in sensitive sectors such as criminal justice and healthcare, and in functions like HR.

Darwinbox’s approach to address this risk:

To ensure real-world variability is captured, Darwinbox makes sure any biases in outputs are addressed. For instance, when a Job Description (JD) is generated on the product platform using our generative model, the prompt to the model is engineered to avoid a basic set of biases by default, such as gender, race, and ethnicity.
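As a rough illustration of what a prompt that avoids a default set of biases might look like, the sketch below appends guardrail instructions to every JD request. The function name `build_jd_prompt`, the `BIAS_GUARDRAILS` tuple, and the prompt wording are assumptions made for this example; the actual prompt used on the platform is not described in this article.

```python
# Hypothetical default guardrails, mirroring the categories named above.
BIAS_GUARDRAILS = ("gender", "race", "ethnicity")


def build_jd_prompt(role: str, skills: list[str]) -> str:
    """Build a JD-generation prompt that always carries the bias guardrails."""
    guardrails = ", ".join(BIAS_GUARDRAILS)
    return (
        f"Write a job description for the role: {role}.\n"
        f"Required skills: {', '.join(skills)}.\n"
        "Use inclusive, neutral language. Do not state or imply any "
        f"preference based on {guardrails}."
    )


# Example usage with made-up inputs.
print(build_jd_prompt("Data Analyst", ["SQL", "Python"]))
```

Keeping the guardrails in one place means every generated JD receives the same instructions, no matter who triggers the request.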


False or Factually Incorrect Information

The challenge of combating misinformation is a serious one. Generative AI models are capable of creating content or insight that, while seemingly credible and well-articulated, is far removed from factual accuracy. Consider a hypothetical scenario where an AI model erroneously reports financial news that a major tech company is about to face significant regulatory hurdles. The result could be widespread market volatility based on false information.

Darwinbox’s approach to address this risk:

Combating the dissemination of inaccurate information by AI models requires rigorous validation mechanisms. By integrating a multi-tiered verification process, where AI-generated content undergoes human expert review before dissemination, we can significantly reduce the risk of spreading falsehoods. At Darwinbox, all AI models are trained on factually accurate, validated information and are retrained periodically to avoid factual errors.


Data Privacy: The Harmful Exposure

Inadvertently sending Personally Identifiable Information (PII) to AI models hosted online poses significant data privacy challenges and security risks. Since the models or the data may be hosted on servers that we don’t control, passing sensitive data like names, addresses, etc. into the model can lead to unauthorized access or data breaches.

Additionally, the model’s data usage policy may allow for the storage and analysis of submitted data for purposes like improving model performance, further complicating issues related to data ownership and consent.

Darwinbox’s approach to address this risk:

We strive to use AI models developed in-house and train them rigorously to exercise more control over data storage and use, thereby eliminating security risks. This also means that our models are hyper-contextualized to our domain and achieve superior performance.
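Where a hosted model cannot be avoided, one complementary safeguard is to redact obvious PII before any text leaves your own systems. The sketch below is an assumption for illustration only, not a description of Darwinbox's pipeline. The `redact_pii` function and both regular expressions are hypothetical and deliberately simple: they catch email addresses and phone-like digit runs, but not names or postal addresses, which would need a dedicated entity-recognition step.

```python
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


# Example usage with made-up contact details.
print(redact_pii("Contact Priya at priya@example.com or +91 98765 43210."))
```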


Over-Reliance on AI: Dependency Overdose

Over-dependence on AI systems is another significant risk. As businesses grow increasingly reliant on Generative AI for a wide range of tasks, they may find themselves trapped in a self-imposed limitation that stifles human creativity and critical thinking. This can lead to the formation of echo chambers where the AI continues to produce what's already known and popular, thereby quashing diversity and the potential for groundbreaking innovation.

Darwinbox’s approach to address this risk:

We make sure that AI augments and enhances human output rather than replacing it. For example, our Stack Ranking (Search-and-Match) model does not just parse and match JDs and resumes, which frees up the recruiter's time for strategic activities. It also surfaces crucial insights from a candidate's profile that help recruiters apply their expertise and make a well-informed decision.
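To make the idea of ranking with insights concrete, here is a toy sketch that scores candidates by skill overlap with a JD and returns the matched skills alongside each score, so the recruiter can see why a candidate ranked where they did. It is purely illustrative and says nothing about how the Stack Ranking model works internally; the `rank_candidates` function, the Jaccard-overlap score, and the sample data are all assumptions.

```python
def rank_candidates(jd_skills, resumes):
    """Rank candidates by Jaccard overlap between JD skills and resume skills.

    resumes maps a candidate name to an iterable of skills.
    Returns (name, score, matched_skills) tuples, best match first.
    """
    jd = {s.lower() for s in jd_skills}
    ranked = []
    for name, skills in resumes.items():
        have = {s.lower() for s in skills}
        matched = jd & have
        union = jd | have
        score = len(matched) / len(union) if union else 0.0
        # The matched skills are the "insight" shown to the recruiter.
        ranked.append((name, round(score, 2), sorted(matched)))
    return sorted(ranked, key=lambda r: r[1], reverse=True)


# Example usage with made-up candidates.
for row in rank_candidates(
    ["Python", "SQL", "Tableau"],
    {"Asha": ["python", "sql"], "Ben": ["excel"]},
):
    print(row)
```

The score only orders the list. The decision stays with the recruiter, who can weigh the matched skills against context the model does not see.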


Ethical Implications: A Pandora's Box

Generative AI's ability to create deepfakes — hyper-realistic but completely fabricated content — is a terrifying advancement. Such technology in the wrong hands can create far-reaching issues, ranging from fake news videos designed to manipulate public opinion, to falsified voice recordings for various malevolent schemes. The impact on trust and credibility at both the individual and institutional levels can be devastating.

Darwinbox’s approach to address this risk:

To counteract the ethical risks posed by deepfakes, we are using a multifaceted approach.

- Incorporating digital watermarking and leveraging AI-driven detection tools can serve as vital mechanisms to pinpoint and root out deceptive content.

- Establishing robust legal frameworks that specifically address the malicious deployment of fakes can act as a significant deterrent, setting a clear precedent for accountability.

- Fostering public awareness by educating users about the intricacies of deepfakes and equipping them with the skills to discern such fabrications can cultivate a society less vulnerable to the manipulations of this potent technology.

Conclusion

Generative AI holds immense promise for shaping a future replete with incredible opportunities and advancements. However, it is not without risks. For business and HR leaders, a balanced approach that melds the undeniable capabilities of AI with human supervision, robust governance, and common sense can help navigate this complex landscape.

HR leaders planning to leverage generative AI should consider evaluating the platform innovation roadmap of their existing or prospective HR technology vendor(s). They should determine if the vendors’ generative AI solutions and use cases can meet the organization’s risk tolerance.

Darwinbox is built with AI at its core. We have invested heavily in AI to ensure its responsible use. Find out the benefits of AI in HR here. Schedule a demo to learn how Darwinbox drives real-world value for modern enterprises.
